Source: Simon Walkowiak — Big data analysis and visualisations

Quality and Credibility Metrics of Online Entities: Academic Review

Quality Vs Credibility of Online Entities

Credibility

The quality of being believable or worthy of trust.

Credibility challenges according to Stanford Web Credibility Research:

- What causes people to believe (or not believe) what they find on the Web?

- What strategies do users employ in evaluating the credibility of online sources?

- What contextual and design factors influence these assessments and strategies?

- How and why are credibility evaluation processes on the Web different from those made in face-to-face human interaction, or in other offline contexts?

Ph.D. Thesis: How Do People Evaluate a Web Site’s Credibility?

Quality

The totality of features and characteristics of a product or service that bear on its ability to satisfy stated or implied needs.

- degree of excellence or fitness for use

Is quality an indicator of credibility or vice versa?

User Generated Content: How Good is It? Slide

- How can we estimate the quality of UGC?

- Directly evaluate the quality.

- What are the elements of social media that can be used to facilitate automated discovery of high-quality content?

- What is the utility of links between items, quality rating from members of the community, and other non-content information to the task of estimating the quality of UGC?

- How are these different factors related?

- Is content alone enough for identifying high-quality items?

- Can community feedback approximate judgments of specialists?

- In this work, the authors used a judged question/answer collection where good questions usually have good answers to model a classifier to predict good questions and good answers, obtaining an AUC (area under the curve of the precision-recall graph) of 0.76 and 0.88, respectively.

- The drawback is that the quality gap is balanced by volume: the larger the volume of UGC, the less difficult the quality evaluation becomes.

- Obtaining indirect evidence of the quality.

- use UGC for a given task and then evaluate the quality of the task results.

- evaluation of the quality of extraction of semantic relations using the Open Directory Project (ODP). Precision of over 60%.

- Crossing different UGC sources and infer from there the quality of those sources.

- using collective knowledge (wisdom of crowds) to extend image tags, and prove that almost 70% of the tags can be semantically classified by using Wordnet and Wikipedia.

- Directly evaluate the quality.
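The AUC figures quoted above refer to the area under a precision-recall curve. As a minimal sketch of how such a number can be computed, the code below uses average precision, a common step-wise approximation of that area. The function names and the toy labels and scores are illustrative assumptions, not the cited authors' classifier or data.

```python
def precision_recall_points(labels, scores):
    """Return (recall, precision) pairs from sweeping a threshold down the ranking.

    labels: 1 for a high-quality item, 0 otherwise (hypothetical judgments).
    scores: classifier confidence that the item is high quality.
    """
    total_positives = sum(labels)
    if total_positives == 0:
        raise ValueError("at least one positive label is required")
    # Rank items from most to least confident (ties keep their input order).
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    points = []
    true_positives = 0
    for rank, (_, label) in enumerate(ranked, start=1):
        true_positives += label
        points.append((true_positives / total_positives, true_positives / rank))
    return points


def average_precision(labels, scores):
    """Step-wise area under the precision-recall curve."""
    area = 0.0
    previous_recall = 0.0
    for recall, precision in precision_recall_points(labels, scores):
        # Each step adds a rectangle: recall gained times precision at that rank.
        area += (recall - previous_recall) * precision
        previous_recall = recall
    return area


# Toy example: five answers judged good (1) or bad (0), with made-up scores.
toy_labels = [1, 0, 1, 1, 0]
toy_scores = [0.9, 0.8, 0.7, 0.4, 0.2]
toy_area = average_precision(toy_labels, toy_scores)
```

A perfect ranking, with every good item scored above every bad one, gives an area of 1.0, so values such as 0.76 and 0.88 can be read against that ceiling.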

The Online Entities Quality Challenge

Entities: social media platforms (Facebook, Twitter, …) or information systems, and information or content on the internet (articles, posts, comments, …).

The advent and openness of online social media platforms often leave them highly susceptible to abuse by suspicious entities. It therefore becomes increasingly important to automatically identify these suspicious entities and mitigate or eliminate their threats.

Anomaly Detection on Social Data: Ph.D. Thesis

The rapid growth of the Internet and the lack of enforceable standards regarding the information it contains have led to numerous information quality problems.

- inability of Search Engines to wade through the vast expanse of questionable content and return “quality” results to a user’s query

Developing a Framework for Assessing Information Quality on the World Wide Web

Fundamental Definitions

“Data Quality” is described as data that is “Fit-for-use”: data considered appropriate for one use may not possess sufficient attributes for another use!

Common Dimensions of Information or Data Quality

- Accuracy: extent to which data are correct, reliable and certified free of error

- Consistency: extent to which information is presented in the same format and compatible with previous data

- Security: extent to which access to information is restricted appropriately to maintain its security

- Timeliness: extent to which the information is sufficiently up-to-date for the task at hand

- Completeness: extent to which information is not missing and is of sufficient breadth and depth for the task at hand

- Conciseness: extent to which information is compactly represented without being overwhelming (i.e. brief in presentation, yet complete and to the point)

- Reliability: extent to which information is correct and reliable

- Accessibility: extent to which information is available, or easily and quickly retrievable

- Availability: extent to which information is physically accessible

- Objectivity: extent to which information is unbiased, unprejudiced and impartial

- Relevancy: extent to which information is applicable and helpful for the task at hand

- Usability: extent to which information is clear and easily used

- Understandability: extent to which data are clear without ambiguity and easily comprehended

- Amount of data: extent to which the quantity or volume of available data is appropriate

- Believability: extent to which information is regarded as true and credible

- Navigation: extent to which data are easily found and linked to

- Reputation: extent to which information is highly regarded in terms of source or content

- Usefulness: extent to which information is applicable and helpful for the task at hand

- Efficiency: extent to which data are able to quickly meet the information needs for the task at hand

- Value-Added: extent to which information is beneficial, provides advantages from its use

These attributes of data quality can vary depending on the context in which the data is to be used.
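To make the context dependence concrete, here is a minimal sketch that scores two of the dimensions above, completeness and timeliness, for a single record. The field names, the record and the age thresholds are hypothetical; the source defines the dimensions only in words, so these formulas are one plausible reading rather than a standard.

```python
from datetime import date


def completeness(record, required_fields):
    """Fraction of required fields that are present and non-empty."""
    filled = sum(1 for field in required_fields if record.get(field) not in (None, ""))
    return filled / len(required_fields)


def is_timely(last_updated, today, max_age_days):
    """True if the record is recent enough for the task at hand."""
    return (today - last_updated).days <= max_age_days


# Hypothetical article record with two of four required fields missing.
article = {"title": "Example", "author": "", "published": None, "body": "Some text"}
required = ["title", "author", "published", "body"]
article_completeness = completeness(article, required)

# The same record can be timely for one use and stale for another.
updated = date(2024, 1, 1)
checked = date(2024, 1, 11)
timely_for_archive = is_timely(updated, checked, max_age_days=30)
timely_for_news = is_timely(updated, checked, max_age_days=7)
```

The two timeliness calls differ only in the threshold, which is the "fit for use" idea: the data are unchanged, but the task decides whether they pass.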

Defining what Information Quality means in the context of Search Engines will depend greatly on whether dimensions are being identified for the producers of information, the storage and maintenance systems used for information, or for the searchers and users of information.

- For the information user, the quality dimensions of interest include relevancy and usefulness. These dimensions are enormously important but extremely difficult to gauge.

Developing a Framework for Assessing Information Quality on the World Wide Web

Quality Metrics

Metrics for IQ in Information Retrieval

Metrics that can assess IQ and can be deployed in Search engines

- Six quality metrics: currency, availability, information-to-noise ratio, authority, popularity, and cohesiveness.

- Factual density measure: a simple statistical quality measure that is based on facts extracted from Web content. Evaluated on Wikipedia articles.

- Seven Wikipedia IQ metrics
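As a rough illustration of one metric from the list, the sketch below computes an information-to-noise ratio for a Web page. It assumes the metric is the total length of the word tokens left after stripping markup, divided by the size of the raw document. That definition is an assumption about how the metric is usually described, since the text above only names it, and the regular expressions are a deliberately crude stand-in for real HTML preprocessing.

```python
import re

# Crude patterns, assumed for illustration: anything in angle brackets is markup,
# and a token is a run of ASCII letters or digits.
TAG_PATTERN = re.compile(r"<[^>]+>")
TOKEN_PATTERN = re.compile(r"[A-Za-z0-9]+")


def information_to_noise_ratio(raw_document):
    """Total token length after preprocessing divided by raw document length."""
    if not raw_document:
        return 0.0
    text = TAG_PATTERN.sub(" ", raw_document)
    tokens = TOKEN_PATTERN.findall(text)
    return sum(len(token) for token in tokens) / len(raw_document)


# A page that is mostly markup scores lower than plain prose.
page_ratio = information_to_noise_ratio("<p>Hello world</p>")
prose_ratio = information_to_noise_ratio("Hello world")
```

Under this reading, a value near 1 means almost every character carries content, while heavy markup, scripts or boilerplate push the ratio towards 0.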

Quality Datasets

Thesis and Dissertation Hubs

– OATD

– EThOS

– PQDOPEN

– ProQuest

– Queens

– CORE

– DiVA

– ETDs

– Ebook

– Dart Europe

– OhioLINK

– UM Repository

Credit: Khalid Kyle

The list above comes from a post by Khalid Kyle, who used these websites to get e-books, theses and dissertations for free while writing a thesis.

Codes of the Week

Kaggle: GOP Debate Twitter Sentiment Analysis

The original challenge was to understand what people thought (based on Twitter discussions) of the US Republican debate that took place in Cleveland. The dataset consists of 20,000 annotated, randomly selected tweets from that night that used #GOPDebate and related hashtags. The annotation was carried out by contributors based on the following guidelines:

- Is this tweet relevant and from a person (not from a news outlet or a brand)?

- Which candidate was mentioned in the tweet? This includes a “no candidate” option.

- What subject was mentioned in the tweet? Several options were provided.

- What was the sentiment of the tweet (positive, negative or neutral)?

Questions of interest:

- What issues resonated with voters?

- Which candidates were viewed most negatively?

- Are people really considering voting for a well-monied, sentient toupee?
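A question such as "Which candidates were viewed most negatively?" reduces to counting sentiment labels per candidate. The sketch below shows one way to do that with the standard library. The dictionary keys (`candidate`, `sentiment`), the label strings and the sample rows are hypothetical and do not reflect the actual column names or contents of the Kaggle dataset.

```python
from collections import Counter, defaultdict


def negative_share_by_candidate(tweets):
    """Rank candidates by the fraction of their tweets labelled negative."""
    counts = defaultdict(Counter)
    for tweet in tweets:
        counts[tweet["candidate"]][tweet["sentiment"]] += 1
    shares = {
        candidate: sentiments["negative"] / sum(sentiments.values())
        for candidate, sentiments in counts.items()
    }
    # Highest negative share first.
    return sorted(shares.items(), key=lambda item: item[1], reverse=True)


# Made-up annotated tweets, for illustration only.
sample_tweets = [
    {"candidate": "Candidate A", "sentiment": "negative"},
    {"candidate": "Candidate A", "sentiment": "negative"},
    {"candidate": "Candidate A", "sentiment": "positive"},
    {"candidate": "Candidate B", "sentiment": "neutral"},
    {"candidate": "Candidate B", "sentiment": "negative"},
    {"candidate": "No candidate mentioned", "sentiment": "positive"},
]
ranking = negative_share_by_candidate(sample_tweets)
```

Using a share rather than a raw count keeps heavily discussed candidates from looking worse simply because they attracted more tweets.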

Links

- Using machine learning to analyze the GOP debate

- What the GOP Debate taught us about machine learning

Guide to Data Science Competitions

Summer is finally here and so are the long-form virtual hackathons. Unlike a traditional hackathon, which focuses on what you can build in one place in one limited time span, virtual hackathons typically give you a month or more to work from wherever you like.

And for those of us who love data, we are not left behind. There are a number of data science competitions to choose from this summer. Whether it’s a new Kaggle challenge (which are posted year round) or the data science component of Challenge Post’s Summer Jam Series, there are plenty of opportunities to spend the summer either sharpening or showing off your skills.

The Landscape: Which Competitions are Which?

- Kaggle

Kaggle competitions have corporate sponsors that are looking for specific business questions answered with their sample data. In return, winners are rewarded handsomely, but you have to win first.

- Summer Jam

Ph.D. Discussions on Quora

I came across some interesting Ph.D. research-related topics on Quora that attempt to measure the quality of, and satisfaction with, a Ph.D. programme. Some of the questions that captured my attention include:

- Q: Is there anyone out there who really enjoyed or is enjoying their time as a Ph.D. student?

- A: Yes, very many! It helps to have a great advisor, a great research area, and to be working on the right problems at the right time. Ph.D. students need to be driven to learn and try new things, to not settle, and to find their own identity as researchers.

- Q: What are the metrics for measuring the quality of a Ph.D. program?

- A: metrics that are focused on the process of the Ph.D.

- Grant funding received by department

- Number of publications by student at graduation

- Number of 1st author papers at graduation by student

- Awards (both faculty and student awards like)

- Fraction that gets academic jobs (Postdocs count in this number)

- A: metrics that are focused on the Ph.D. student.

- Did the student receive a job in the field they wanted?

- Was the research they produced impactful (citations, not just #)

- Was the student satisfied with their experience?

- Do students feel well prepared when they join their next job? (academic or otherwise).

- Q: I have spent one year in my Ph.D. program and haven’t published anything. What should I do?

- A: What is the point of writing a paper that nobody reads? It is better to keep learning new things and keep asking the most interesting questions. When you finally publish your first paper, it will be amazing and worth 20 mediocre papers. The impact is what matters, not publication count. The hard stuff takes some time to come together into a quality publication.

Measuring the Quality of Interesting Entities on the Web

Quality is defined in Wikipedia as the standard of something as measured against other things of a similar kind; the degree of excellence of something.

Today’s information and data pools on the Web focus on the quantity of information rather than its quality.

The assessment of the quality of information is especially important because decisions are often based on information from multiple and sometimes unknown sources whose reliability and accuracy are questionable.

However, the Web lacks quality-dependent filter mechanisms, automatic identification of misuse patterns, and tools to establish user trust in information and authors.

Hence the need to develop mechanisms for estimating the quality of textual Web documents and to evaluate these mechanisms for their effectiveness and efficiency.

My entities of interest include Websites, news feeds, social media feeds, digital adverts, and other Web articles. Other important entities include air, water, soil, and life.

Large scale machine learning is playing an increasingly important role in improving the quality and monetisation of Internet properties. A small number of techniques, such as regression, have proven to be widely applicable across Internet properties and applications.

My Research and Publication Platforms

My Journal Platforms of Interest

Computational Social Networks

Focus on common principles, algorithms and tools that govern network structures/topologies, network functionalities, security and privacy, network behaviors, information diffusions and influence, social recommendation systems which are applicable to all types of social networks and social media. Topics include (but are not limited to) the following:

- Social network design and architecture

- Mathematical modeling and analysis

- Real-world complex networks

- Information retrieval in social contexts, political analysts

- Network structure analysis

- Network dynamics optimization

- Complex network robustness and vulnerability

- Information diffusion models and analysis

- Security and privacy

- Searching in complex networks

- Efficient algorithms

- Network behaviors

- Trust and reputation

- Social Influence

- Social Recommendation

- Social media analysis

- Big data analysis on online social networks

Journal of Big Data

The journal examines the challenges facing big data today and going forward including, but not limited to: data capture and storage; search, sharing, and analytics; big data technologies; data visualization; architectures for massively parallel processing; data mining tools and techniques; machine learning algorithms for big data; cloud computing platforms; distributed file systems and databases; and scalable storage systems.

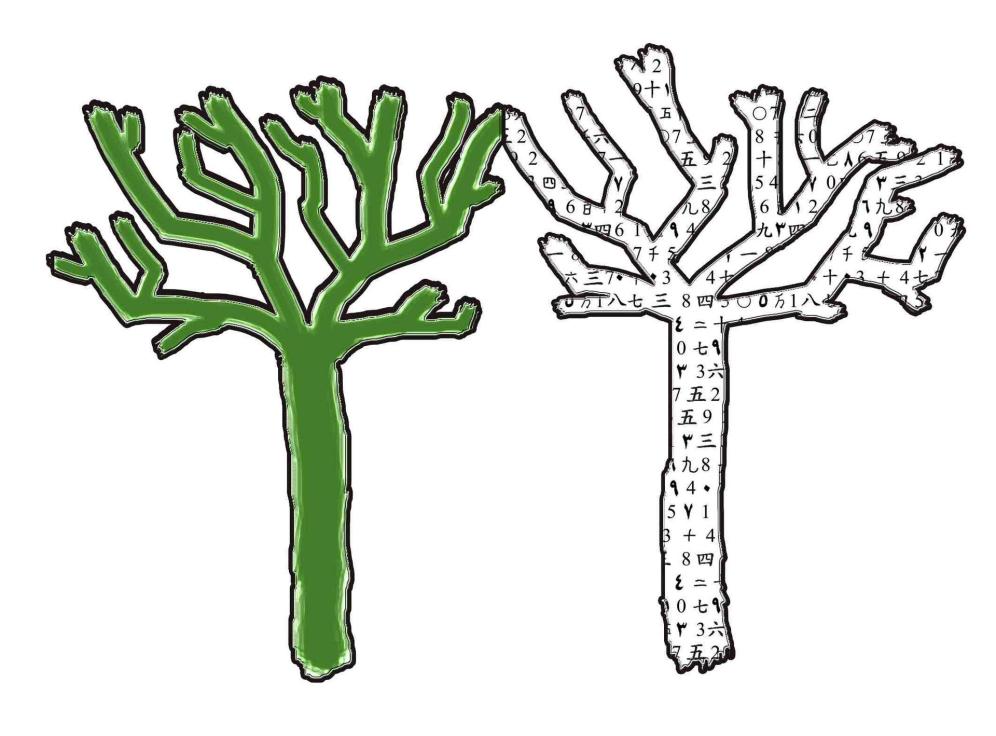

Bridging the Gap Between Academia and Industry

It is widely believed in both academia and industry that the research-practice gap is universal and difficult to overcome. It is also widely accepted that academic research and industry practice can gain significantly from each other. Yes! Mathematical models have countless possible applications in industry.

- There is a huge gap between research and practice. The gap is real, but it can be bridged.

- To bridge the gap we need a new kind of practitioner: the translational developer.

The gap may be deliberate or accidental and extends to professional societies. In computing, the most frequently quoted challenges in bridging the research-practice gap include:

- the incompatibility in timescales of research and practice

- research usually not seen as relevant for practice

- research demands a different kind of rigour than practice supports, and

- the knowledge and skill sets required for research differ from those required for practice.

What then can we do to bridge this gap? How can the knowledge held in academia be brought to businesses? How can business and product feedback be used in academia to optimise existing techniques and tools or develop new ones?

These are the questions addressed in this blog. The specific interest here is academic-industry collaboration, with a particular focus on our approach to the teaching and practice of big data and machine learning.

This hub will offer the best, most comprehensive research and industry solutions to problems that matter to society.